In a cluster spanning multiple geographical regions, Global Tables let database clients in any region read data with region-local latencies. Imagine a table with data that is read from all over the world: say, for example, a table of exchange rates in a global bank’s database. Queries run on behalf of users anywhere might access these rates. This is in contrast to other types of data (say, a user’s account data) which have an affinity to the user’s own region. Global Tables make accessing these exchange rates fast.

More specifically, these tables let clients in any region perform non-stale (sometimes called strongly consistent) reads of data with region-local latencies. This means that local reads from all regions always serve the latest version of the data, never a potentially-stale copy.

On top of this, Global Tables use time delays to resolve conflicts between readers and concurrent writers so that readers rarely block on writers. Combined, this means low latency reads in both the common case and also in the tail. This is great for read-heavy data.

But the lunch isn’t free - writes to Global Tables are more expensive than writes to regular tables in terms of latency. Thus, the feature is useful primarily for data that is read much more frequently than written.

Global Tables were introduced about one year ago, in CockroachDB version 21.1. We’re proud of this feature both because it helps solve an important problem for our more sophisticated users, and also because the design and implementation of the feature are novel in some ways. For example, as we’ll see, the synchronization required for making sure that no replica serves “stale” data is done implicitly, through the passage of time (i.e. through semi-synchronized clocks), rather than through the more usual mechanisms of locks and network communication. This design has good latency properties in the face of read/write contention and good availability properties in the face of region failure.

Global Tables represent a new point in the multi-dimensional space of trade-offs between read latency, write latency, linearizability of reads, failure tolerance, data partitioning, and storage cost.

Before we introduced Global Tables, users of CockroachDB (as well as many other databases) had a few options when it came to managing their data in a multi-region cluster, organized mostly around partitioning data such that different clients get low-latency access to different partitions. The problem with partitioning is that it doesn’t apply to “non-localized” data (data that does not have an affinity to a specific region). Global Tables make different tradeoffs that are more appropriate for non-localized data; by letting writes to infrequently written data perform more slowly, they give all clients low and predictable latency reads.

CockroachDB Context

We are following up on our previous work on follower reads; we have written about it here, and we recommend that readers skim that previous blog post for context.

In brief, CockroachDB splits data into 512MB “ranges”. Each range is replicated at least 3 ways and the range’s replicas form a Raft consensus group. Writes need to be acknowledged by a majority of replicas before being applied.

At any point in time, one replica acts as the “leaseholder” for the range; the leaseholder needs to be part of all the write quorums and it is the only replica that can serve strongly consistent reads. The other replicas can only serve historical, stale reads. Thus, from a client’s perspective, the latency of a read is dictated by the round-trip time to the leaseholder of the range being read. The latency of a write depends on the round-trip time to the leaseholder plus the time it takes to achieve consensus for that write. The consensus latency is on the order of a round-trip from the leaseholder to the closest majority of replicas. This is a major simplification of what happens for the complex SQL transactions that CockroachDB runs, but it provides a useful mental model. In particular, it shows that the latency to the leaseholder and the quorum latency matter.
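To make this mental model concrete, here is a minimal sketch of the two latencies. The function names and the round-trip numbers are hypothetical and chosen only for illustration; this is the simplified model from the paragraph above, not how CockroachDB computes anything internally.

```python
from typing import Sequence


def consensus_latency_ms(leaseholder_rtts_ms: Sequence[float]) -> float:
    """Round trip from the leaseholder to the closest majority of replicas.

    The list holds one RTT per replica, including 0 for the leaseholder itself.
    """
    rtts = sorted(leaseholder_rtts_ms)
    majority = len(rtts) // 2 + 1
    # The slowest member of the fastest majority determines the quorum latency.
    return rtts[majority - 1]


def read_latency_ms(client_to_leaseholder_rtt_ms: float) -> float:
    """A consistent read costs one round trip to the leaseholder."""
    return client_to_leaseholder_rtt_ms


def write_latency_ms(client_to_leaseholder_rtt_ms: float,
                     leaseholder_rtts_ms: Sequence[float]) -> float:
    """A write costs the trip to the leaseholder plus the consensus round."""
    return client_to_leaseholder_rtt_ms + consensus_latency_ms(leaseholder_rtts_ms)


# Hypothetical RTTs (ms) from the leaseholder to each replica.
replicas_in_one_region = [0, 2, 3]
replicas_across_regions = [0, 2, 60, 65, 140]

print(write_latency_ms(150, replicas_in_one_region))
print(write_latency_ms(150, replicas_across_regions))
```

In this model, a remote client pays the full round trip to the leaseholder on every read, which is exactly the cost that the rest of this post tries to avoid.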

In a multi-region CockroachDB cluster, minimizing the latency of data access is the name of the game.

As we’ve explained in our previous blog post on multi-region SQL, one way CockroachDB does this is by allowing administrators to configure the location of leaseholders and quorums. This can be done at the schema level: SQL tables in multi-region databases have a locality setting. The default option, REGIONAL locality, allows the admin to specify one region where the leaseholders of all the ranges containing table data are to be located. A quorum of replicas are spread around the leaseholder depending on the table’s survival goals. By default, tables are configured to survive the loss of one availability zone within a region (SURVIVE ZONE FAILURE); in this case, all the replicas are spread between availability zones in the leaseholder region. Tables can also be configured to survive the failure of a whole region (SURVIVE REGION FAILURE), in which case replicas are spread to other regions.

CockroachDB also makes it possible to optimize individual rows within a table for being accessed from different regions. A table can be configured with the REGIONAL BY ROW locality, in which case each row gets a hidden region column that controls which geographical region gets fast access to that respective row. Technically, the table and all its indexes are partitioned on the region column, and the leaseholders in different partitions are pinned to different locations.

When data has a natural affinity to a region, the REGIONAL and REGIONAL BY ROW tables serve our latency goals well. Data that doesn’t have a particular affinity - data that’s commonly accessed from multiple regions - is more problematic.

When these accesses are reads that don’t need strong consistency (i.e. reads that don’t necessarily need to see the absolute freshest data), CockroachDB allows all regions to serve them: by default, all tables have non-voting replicas in all regions (replication to non-voters is asynchronous), and these replicas can serve snapshots of the data that are a few seconds old. We call reads served by non-leaseholder replicas “follower reads”. CockroachDB supports two types of stale reads: exact staleness reads, through the SELECT … AS OF SYSTEM TIME now() - '5s' syntax, and bounded staleness reads through the SELECT … AS OF SYSTEM TIME with_max_staleness('10s') syntax.

Stale follower reads are an important tool, but they can’t always be used. In particular, they can’t be used in read-write transactions; these transactions need to perform consistent reads, otherwise they run into trouble with serializable transaction isolation. This section of our follower reads blog post goes into more detail about when stale reads can and cannot be used.

When data doesn’t have a particular read affinity and also doesn’t tolerate stale reads, then Global Tables can help.

Fast Consistent Global Reads: Taming the Contention Footprint

Our overall goal with Global Tables is to serve consistent reads from many (or all) of a range’s replicas, not just from the leaseholder. We want each replica to serve reads without needing to coordinate with any other replicas.

When designing our Global Tables, we studied several options. The consideration that ultimately led us to the chosen implementation was the behavior of reads when they encounter read/write contention—i.e. when they run into a writing transaction that is writing to the same row.

Contention is frequently the cause of high tail latency for reads, and we wanted Global Tables to provide predictable latency even in the face of contention. We’ll explore below how these tables work, starting with a simplified model and then coming back to CockroachDB details.

First, let’s expand on what we mean by “consistent reads”: a read is consistent if it returns the latest committed data. Another word for “consistency” in this context is “linearizability”, which means that all the reads and writes need to behave as if they were executed one at a time, in a sequential order consistent with real-time (i.e. reads need to “see” all the writes that finished before the read started). When a replicated system is linearizable, it intuitively behaves like a logically-equivalent unreplicated system: clients cannot observe any effects of replication. In particular, they cannot see “stale data” if they happen to query a replica that is not up to date with the latest writes. This was discussed at some length in our previous post about the CockroachDB consistency model.

A Replicated Register Synchronizing Through Communication

Let’s try to build a replicated but linearizable system to get intuition about some of the difficulties. We’ll talk abstractly about the simplest system of all: a single register accepting reads and writes. In later sections, we’ll translate this to a much more complex transactional database. So, we have a replicated register and we want all replicas to be able to serve reads. For simplicity, we’ll accept that a single one of the replicas can accept writes; we’ll call this replica the leader. We’ll also accept that our system is not fault tolerant: the failure of any replica will cause writes to block (although in practice this would be a debilitating property).

Since we want every replica to serve consistent reads without communicating with other replicas, it seems clear that all writes need to be communicated to every replica. So, the leader has to broadcast every write. Let’s consider what could happen if this broadcast were done in a “fire and forget” manner - i.e. if the leader, and any other replica, would start serving a write as soon as it becomes aware of it.

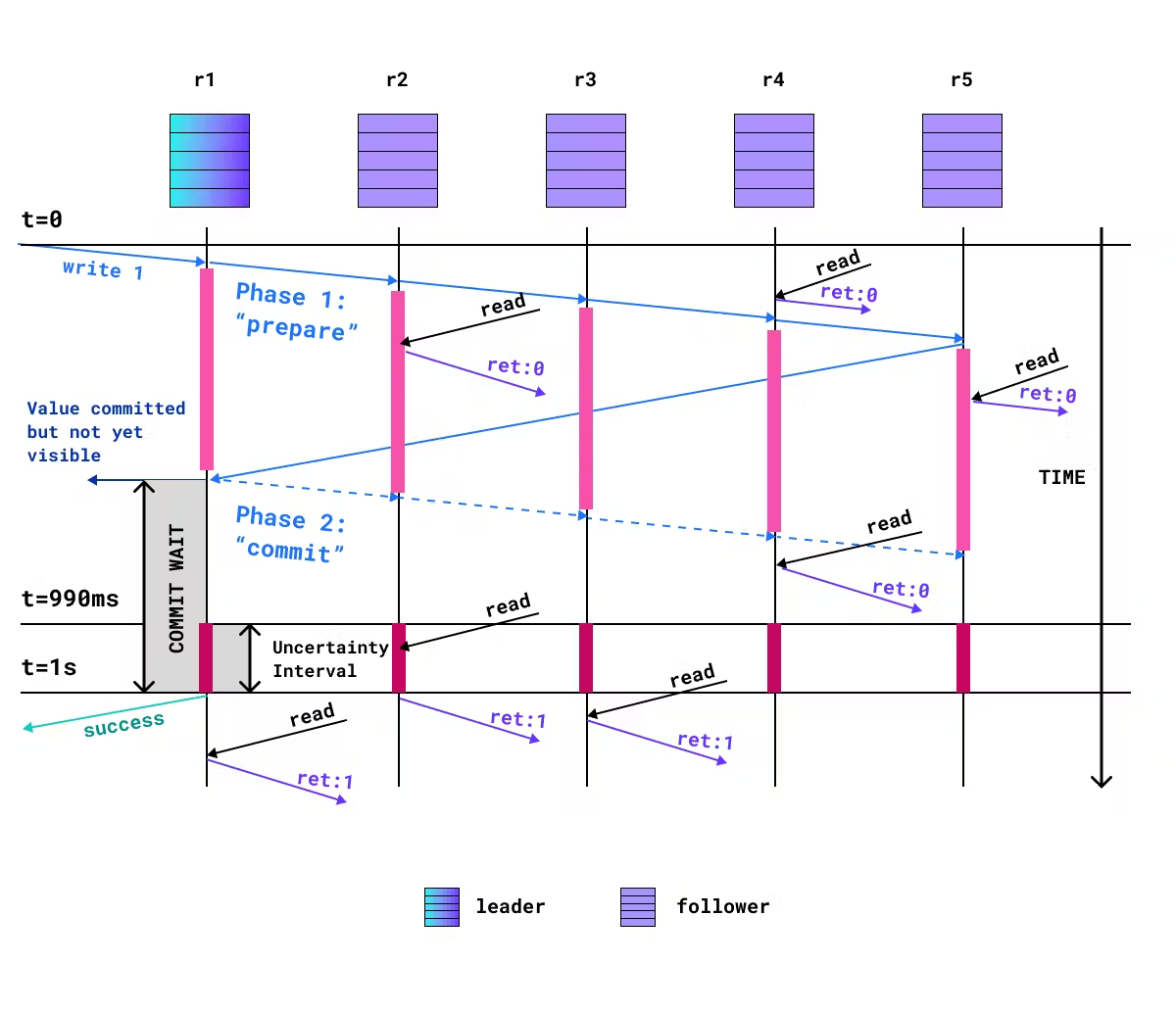

This diagram shows the hazard. Assume the register starts with storing value 0, and then a client writes 1 into it. Imagine the replicas ordered according to the latency that the broadcast from the leader incurs before reaching them. The timeline makes it apparent that different replicas would start serving the new value 1 at different times, which opens the door for a read being served by r1 to return 1 before a read served by r4 returns 0. The second read is “inconsistent”; our replicated register does not exhibit linearizable behavior. Jumping to the real world, this might mean that I make a bank transfer to you, then call you on the phone to ask you to check your account, and when you check the money appears to not be there.
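The hazard can be reproduced with a few lines of code. This is a toy sketch: the replica names match the diagram, but the broadcast delays and read times are hypothetical values picked to trigger the anomaly.

```python
def value_served(read_time_ms, write_time_ms, broadcast_delay_ms, old=0, new=1):
    """Value returned by a replica that serves a write as soon as it hears of it."""
    arrival_ms = write_time_ms + broadcast_delay_ms
    return new if read_time_ms >= arrival_ms else old


# Hypothetical one-way broadcast delays from the leader to two replicas.
delays_ms = {"r1": 5, "r4": 120}

# The write is issued at t=0. A read hits r1 at t=10, then a later read hits r4 at t=50.
first = value_served(read_time_ms=10, write_time_ms=0, broadcast_delay_ms=delays_ms["r1"])
second = value_served(read_time_ms=50, write_time_ms=0, broadcast_delay_ms=delays_ms["r4"])

print(first, second)
```

The first read observes the new value and the second, later read observes the old one, which is the non-linearizable history described above.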

Okay, so this simple scheme doesn’t work. Somehow we need to make writing to our register “atomic” across all the replicas: there needs to exist a point in time before which all reads return the old value and after which all reads return the new value. This suggests that we need some protocol through which all replicas agree on a new value before any replicas return that new value.

A simple such agreement, or “consensus” algorithm, is Two-Phase Commit (2PC). In 2PC, the leader first proposes (“prepares”) the new value to all the replicas and, once it has confirmed that everybody acknowledged the proposal, it broadcasts a “commit” message. Once a replica has heard about a proposal, it cannot serve either the old value or the new value: it can’t serve the old one because it can’t assume that the others haven’t started serving the new one, and it can’t serve the new one because it can’t assume that the others have stopped serving the old one.

Essentially, a prepared value on a replica acts as a local lock, blocking other accesses. Once the broadcast of the commit message reaches a replica, that replica can start serving the new value: the replica knows that nobody will ever serve the old value again; the lock is released.

This diagram shows the periods of time that different replicas are locked, in red. Reads arriving during these periods have to block. The point labeled “success” on the diagram represents the “linearization point” of the write: all reads that returned before that point have returned the old value and all reads that return from now on will return the new value. Some reads that started after the write started might have returned the old value (like the first read from r4 on the diagram), others blocked on locks and took a while to return the new value (like the second read from r4); all these reads were concurrent with the write, “racing” with it, and so are free to return either the old or the new value without violating linearizability (or, generally, without violating most people’s intuition about how a register should behave).

This consensus scheme achieves our consistency goals. It has major fault tolerance issues that we’ll ignore; we’ll focus on the read latency issues instead. You can visually tell that each “lock” is held for a duration equal to the round-trip time between the leader and the furthest replica. In a multi-region setting, this can be hundreds of milliseconds. Our register is using communication between replicas to coordinate the acquisition and releasing of locks, and that is fundamentally slow across a wide-area network.
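A small sketch shows where that lock duration comes from. It assumes symmetric one-way delays between the leader and each replica and a leader that sends the commit as soon as the last acknowledgment arrives; the function name and the delay values are hypothetical.

```python
def lock_windows(one_way_delay_ms):
    """Return {replica: (locked_from_ms, locked_until_ms)} for one 2PC write.

    The leader sends "prepare" at t=0. A replica locks when the prepare arrives
    and unlocks when the commit arrives.
    """
    # The leader can only send "commit" once the slowest acknowledgment is back.
    commit_sent_ms = max(2 * d for d in one_way_delay_ms.values())
    return {
        name: (d, commit_sent_ms + d)
        for name, d in one_way_delay_ms.items()
    }


# Hypothetical one-way delays from the leader to each replica.
windows = lock_windows({"r1": 5, "r2": 40, "r3": 100})
for name, (start, end) in windows.items():
    print(name, "locked for", end - start, "ms")
```

Under these assumptions every replica, even one sitting next to the leader, stays locked for the round trip to the furthest replica.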

In addition, here we’ve discussed the cost of committing a value in a very simple system, where the only latency at play is the commit, or consensus, latency. In an interactive, transactional database like CockroachDB, different types of locks can be held for longer - for example, for the whole duration of a transaction, which includes interactions with the application code. We call the duration of locks the “contention footprint” of a write (or, more generally, of a writing transaction).

A Replicated Register Synchronizing Through Clocks

Since there are fundamental reasons why locks need to be held for the duration of communication, we’ve tried to come up with a design whereby reads don’t need to encounter these locks in the happy path. One thing we can do is schedule writes to become visible at some point in the future such that the writer has enough time to release its locks before the scheduled timer fires. For example, the leader can assign a future timestamp to each write (say, one second in the future). Each replica would interpret that timestamp as the time when the write is supposed to become visible. The replicas could then essentially use their local clocks to synchronize implicitly with one another so that they all start returning the new value at the same time.

There are two problems with this scheme that we’ll have to work through:

Violations of read-your-writes.

Clock skew.

If we were to directly implement this scheme, a client performing a write would receive a successful response and then fail to read the value that it just wrote because the value is still only scheduled to become visible later. This would be a violation of both linearizability and common sense.

We can address this problem by having the leader wait until the value’s timer expires (and thus the value becomes globally visible) before returning to the client. We call this wait period “commit-wait”. Readers may recognize that Google’s Spanner has a similar concept with the same name. This means that the writing client has to wait one second before it receives the write’s acknowledgment. That’s a big latency cost for writers, but it comes with the benefit that readers do not block. Note that the commit-wait overlaps with the communication latency for achieving consensus on the write.
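As a rough sketch of commit-wait in the toy register: the write is scheduled one second ahead, and the acknowledgment is delayed until that moment, overlapping with the time spent replicating. The function names and the replication latencies are hypothetical.

```python
def visible_at_ms(now_ms, lead_ms=1000):
    """Timestamp at which a write issued now is scheduled to become visible."""
    return now_ms + lead_ms


def ack_time_ms(now_ms, replication_ms, lead_ms=1000):
    """Time at which the leader acknowledges the write to the client.

    Commit-wait runs concurrently with replication, so the client waits for
    whichever of the two finishes last.
    """
    replicated_at = now_ms + replication_ms
    return max(visible_at_ms(now_ms, lead_ms), replicated_at)


print(ack_time_ms(now_ms=0, replication_ms=200))   # commit-wait dominates
print(ack_time_ms(now_ms=0, replication_ms=1500))  # replication dominates
```

When replication is faster than the lead time, the writer pays the full lead time; the second call illustrates why the lead time should be chosen to exceed the expected replication latency.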

The second problem is that the clocks used by different replicas are not in perfect sync. A replica with a fast clock could start returning the new value before a replica with a slow clock. We can deal with this by making an assumption about the maximum clock skew between any two replicas. It’s generally possible to synchronize clocks within a couple of milliseconds using NTP or similar software methods, or below one millisecond by using atomic or GPS clocks. Note that these numbers are significantly better than the latency of communication. Let’s assume a maximum clock skew of 10ms.

To tolerate skew, we introduce the notion of an “uncertainty interval” for our readers: from the perspective of a reader, we consider values that are scheduled to become visible in the next 10ms as “uncertain” - we can’t be sure that another replica does not consider them to be visible already. When encountering an uncertain value, a reader can wait until the local clock reaches the value’s timestamp. At that point, the reader can be certain that all other replicas are either considering the value to be visible, or at least uncertain. In either case, no future read will see the old value (this is sometimes called the “monotonic reads” property).
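The reader's rule can be sketched as follows, using the 10ms skew assumed above. The function and its return shape are hypothetical; the sketch only models a single scheduled value on a single replica.

```python
MAX_SKEW_MS = 10  # assumed maximum clock skew between any two replicas


def serve_read(local_clock_ms, old_value, new_value, new_value_ts_ms,
               max_skew_ms=MAX_SKEW_MS):
    """Return (value, wait_ms) for a read arriving at local_clock_ms."""
    if new_value_ts_ms <= local_clock_ms:
        # The value is already visible according to the local clock.
        return new_value, 0
    if new_value_ts_ms <= local_clock_ms + max_skew_ms:
        # Uncertain: a replica with a faster clock may already serve the new
        # value, so wait until the local clock reaches the value's timestamp.
        return new_value, new_value_ts_ms - local_clock_ms
    # The value is far enough in the future that no replica can serve it yet.
    return old_value, 0


print(serve_read(1000, 0, 1, new_value_ts_ms=900))
print(serve_read(1000, 0, 1, new_value_ts_ms=1004))
print(serve_read(1000, 0, 1, new_value_ts_ms=1500))
```

The wait is bounded by the maximum skew, never by network latency.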

What we’ve achieved here is that reads only ever wait for up to the maximum clock skew when coordinating with concurrent writes. They don’t need to wait for slow and highly variable wide-area network communication to synchronize with the visibility of the latest write. This strategy can reduce the tail latency of consistent reads from hundreds of milliseconds to single-digit milliseconds.

The diagram above shows that reads generally do not block on communication. Reads happening during the time when the value “1” is replicating will return the previous value. Even reads happening after “1” has finished replicating still return the previous value for a while. Only when the new value enters the uncertainty interval of each replica’s clock do reads start blocking, and they only block for up to the tolerated clock skew.

Global Tables in CockroachDB

Coming back to CockroachDB, Global Tables provide low-latency, strongly consistent reads from all replicas using the idea sketched in the previous section. Because these are strongly consistent reads, they can be used in read-write transactions (as opposed to stale reads). When reading from a Global Table, the read can generally be served from any replica (in particular, from a replica that’s located geographically close to the SQL client performing the read), rather than having to be served by the leaseholders of the data ranges being read.

The obvious benefit of letting all replicas serve strong reads is that, if a client is located in the same region as a replica, it gets local-region latency reads. A second benefit is the load-balancing aspect through horizontal scaling: the leaseholder is no longer a bottleneck for high-read-throughput ranges.

A table is configured as “global” by setting its locality to GLOBAL: ALTER TABLE foo SET LOCALITY GLOBAL. This causes non-voting replicas to be placed in every region that does not have voting replicas, so that all regions are able to serve follower reads. Then, the stars are aligned such that transactions writing to the ranges containing the data for the table and its indexes operate “in the future”, as we’ll detail below. As a tradeoff, writes on these tables are slower.

Global Tables fit seamlessly into CockroachDB, and they compose spectacularly with other features. For example, it’s common to have a “reference table” that contains mostly-static data that is referenced by other child tables. In many cases, the reference table doesn’t have location affinity, and so it can be a Global Table, even when the other tables referencing it are partitioned. Foreign key checks performed implicitly when inserting or updating the child table will use follower reads to stay local to one region, as will joins between the child and parent.

Before CockroachDB v21.1

Before CockroachDB v21.1, one way to achieve consistent reads from multiple replicas was through the use of the so-called Duplicate Indexes Topology. The trick was to define multiple identical indexes on a table, all of them storing all the columns, and then to bind each index to a different region. This pattern had multiple issues, going from ergonomics (changing a database’s regions required adding or dropping indexes), to cost (each index needed to be replicated individually for fault tolerance), to tail latency (locks held by writers block readers).

The duplicate indexes idea was, in a way, a hacky way to (ab)use SQL index functionality in order to get features that can be provided more efficiently at a lower level of the stack. If you squint, the way they work is by having writes communicate with a number of replicas, such that reads can be served by any one of these replicas. The technique is similar in spirit to Quorum Leases, which have been studied academically. For Global Tables, we have gone a different way, along the lines suggested in our discussion of the replicated register.

Coordinating through semi-synchronized clocks

CockroachDB is a Multi-Version Concurrency Control (MVCC) system, where all reads and writes are timestamped using local clocks. Writes on Global Tables operate at future MVCC timestamps. In a sense, writes are “scheduled” to take effect a few hundred milliseconds ahead of time.

When a writer attempts to write to a Global Table, it is told that the MVCC history at the present time is already set in stone. The only option it has is to write above the present time.

Readers familiar with CockroachDB will recognize this “set in stone” mechanism as the “closed timestamp”.

Closed timestamps

As described in detail in our previous blog post, “closed timestamps” are the mechanism through which a replica becomes aware that it can serve reads below a specific timestamp, as it is guaranteed to no longer receive writes below that timestamp. Thus, a closed timestamp is a promise from the range’s leaseholder to the other replicas that it will no longer evaluate writes below that timestamp. Instead, if a client attempts to perform such a write, the leaseholder will “push” the writing transaction, forcing it to write at a higher timestamp instead (footnote: pushes may lead to transaction restarts in some cases).

Closed timestamps are usually communicated through the Raft replication stream, and so they are synchronized with writes: a replica receiving a closed timestamp update knows that it has already seen all writes at lower MVCC timestamps.

Whereas closed timestamps are typically established a few seconds in the past on REGIONAL tables, closed timestamps are established a few hundred milliseconds in the future on Global Tables. By moving this lever forward, Global Tables orchestrate for MVCC history to be closed at the present time on all replicas, allowing all replicas to serve non-stale reads using the standard follower read mechanism.
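The effect of moving the closed timestamp into the future can be sketched with integer millisecond timestamps. Everything here is a simplification: the function names are hypothetical, the 3000ms lag and 500ms lead are illustrative stand-ins for "a few seconds" and "a few hundred milliseconds", the sketch treats the closed timestamp itself as closed, and it ignores clock uncertainty.

```python
def closed_timestamp(now_ms, lead_ms):
    """Closed timestamp published by the leaseholder (negative lead = lagging)."""
    return now_ms + lead_ms


def push_write(write_ts_ms, closed_ts_ms):
    """Writes at or below the closed timestamp are pushed just above it."""
    return max(write_ts_ms, closed_ts_ms + 1)


def follower_can_serve(read_ts_ms, closed_ts_ms):
    """A follower may serve a read only at a timestamp that is already closed."""
    return read_ts_ms <= closed_ts_ms


now = 10_000
regional_closed = closed_timestamp(now, lead_ms=-3000)
global_closed = closed_timestamp(now, lead_ms=500)

# On a REGIONAL table a present-time write proceeds, but followers cannot
# serve present-time reads. On a GLOBAL table it is the other way around.
print(push_write(now, regional_closed), follower_can_serve(now, regional_closed))
print(push_write(now, global_closed), follower_can_serve(now, global_closed))
```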

Global Tables implementation

Ensuring that all replicas consider the present time to be closed by having the leaseholder close future timestamps is the key idea; all other pieces follow from that. The closed timestamp forces writers to write at future MVCC timestamps, which is analogous to scheduling writes to take effect in the future, as we did for our toy register. Like we described there, writers perform commit-wait in order to ensure that, once their commit is acknowledged to the client, any future read will have an MVCC timestamp high enough such that it will “see” the write.

Also like we described for the register, CockroachDB uses the clock uncertainty window to ensure that, once a value is returned by a read served by replica A, it continues to be returned by reads served by any other replica (including replicas with slower clocks). CockroachDB is configured with a maximum tolerated clock skew (see: Living Without Atomic Clocks). If the maximum skew is, say, 10ms, and a read at timestamp t encounters a value with timestamp, say, t+5ms, then that reader is forced to change its timestamp to t+5ms. This implies validating all the previous reads through a refresh operation. If the t+5ms timestamp is in the future of present time, the reading transaction will also perform commit-wait, just like future-time writers. This ensures the monotonicity of reads: once this reader commits, every future transaction is guaranteed to return the write in question because the t+5ms timestamp will either be below the read timestamp of new transactions or, at the very least, will be in their uncertainty interval.
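The t+5ms example can be traced with a small sketch. The function name and return shape are hypothetical, the refresh of previous reads is not modeled, and timestamps are plain integers in milliseconds rather than hybrid logical clock values.

```python
def read_with_uncertainty(read_ts_ms, value_ts_ms, now_ms, max_skew_ms=10):
    """Return (final_read_ts_ms, sees_value, commit_wait_ms) for one read."""
    if value_ts_ms <= read_ts_ms:
        # The value is at or below the read timestamp: plainly visible.
        return read_ts_ms, True, 0
    if value_ts_ms <= read_ts_ms + max_skew_ms:
        # The value is in the uncertainty window: bump the read timestamp to
        # the value's timestamp, and commit-wait if that is still in the future.
        commit_wait_ms = max(0, value_ts_ms - now_ms)
        return value_ts_ms, True, commit_wait_ms
    # The value is beyond the uncertainty window: the read ignores it.
    return read_ts_ms, False, 0


print(read_with_uncertainty(read_ts_ms=1000, value_ts_ms=1005, now_ms=1000))
print(read_with_uncertainty(read_ts_ms=1000, value_ts_ms=1005, now_ms=1008))
print(read_with_uncertainty(read_ts_ms=1000, value_ts_ms=1200, now_ms=1000))
```

In the first call the reader moves to t+5ms and waits 5ms; in the second, present time has already passed the value's timestamp, so no wait is needed.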

The key benefit of this scheme is that, if timestamps are closed far enough in advance of present time, then readers do not block on contending writers: neither on locks explicitly held by writers during the lifetime of their interactive transactions, nor on the locks implicitly held during the writer’s consensus round on commit. This is a big deal for the tail latencies of transaction commits. In the face of read/write contention, readers only block for commit-wait, whose duration depends on the degree of clock synchronization. Commit-wait is expected to be much quicker than communication rounds across the globe.

The amount of “lead time” (duration in advance of present time) that closed timestamps use is chosen such that by the time it becomes present-time, the transaction has likely released its locks, replication has propagated the updated data, and present hybrid logical clock (HLC) time is already closed on all replicas.

The amount of “lead time” that closed timestamps should use (i.e. how far in advance of real time the closed timestamp should be) is computed as a function of:

The maximum clock skew.

The time it takes to achieve consensus for committing a transaction.

The time it takes a committed value to replicate to all non-voting replicas.

The lifetime of a writing transaction.

The maximum clock skew needs to be taken into account because, in order for a follower to serve a read at timestamp t, it’s not enough for t to be closed; t+max_clock_offset also needs to be closed (no new writes can be allowed to appear in the read’s uncertainty window after the read has been served).
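Expressed as a predicate, the condition looks roughly like this. The function name is hypothetical and timestamps are simplified to integer milliseconds.

```python
def follower_can_serve_with_uncertainty(read_ts_ms, closed_ts_ms, max_clock_offset_ms):
    """A follower can serve a read at t only if t + max_clock_offset is closed.

    Otherwise a write could still land inside the read's uncertainty window
    after the read has been served.
    """
    return read_ts_ms + max_clock_offset_ms <= closed_ts_ms


# With a 10ms offset, a closed timestamp only 5ms ahead of the read is not enough.
print(follower_can_serve_with_uncertainty(1000, 1005, 10))
print(follower_can_serve_with_uncertainty(1000, 1010, 10))
```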

The time to achieve consensus on transaction commit needs to be taken into account because we want writing transactions to be committed before present-time catches up to their timestamp. The consensus latency is on the order of one roundtrip from the leaseholder to a quorum of voting replicas. Depending on the database’s survival goal, these replicas are either in the leaseholder’s region, or in nearby regions.

Similarly, the time it takes a committed value to propagate to all the non-voting replicas also needs to be taken into account. Once a write commits at timestamp t, a record of this commit, and also a closed timestamp update for t, need to reach the furthest replica before this replica can serve reads at t.

Finally, the duration of a writing SQL transaction needs to be taken into account because we want transactions to release all their locks by the time the timestamp at which they write enters the uncertainty interval of readers (otherwise the readers would start blocking on these locks, which we’re trying to avoid). Luckily, this lifetime duration overlaps with the maximum clock skew component of the equation, so only the maximum of the two matters.

The sum of these factors controls the lead duration of closed timestamps on Global Table ranges, and that in turn translates into a bound on the commit-wait duration for writers. The commit-wait for readers is less, bounded by the maximum clock skew alone.
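One way to read the description above is the following back-of-the-envelope formula. It is a sketch of the reasoning in this section, not CockroachDB's actual computation; the function name and all the input values are hypothetical.

```python
def lead_time_ms(max_clock_skew_ms, consensus_ms, replication_ms, txn_lifetime_ms):
    """Lead time for closed timestamps, following the factors listed above.

    The transaction lifetime overlaps with the clock skew component, so only
    the larger of the two is counted; the remaining factors are summed.
    """
    return max(max_clock_skew_ms, txn_lifetime_ms) + consensus_ms + replication_ms


# Hypothetical inputs, in milliseconds.
lead = lead_time_ms(max_clock_skew_ms=10, consensus_ms=50,
                    replication_ms=150, txn_lifetime_ms=100)
print(lead)
```

In this reading, the lead bounds the commit-wait that writers pay, while readers wait at most the maximum clock skew.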

Availability considerations

The implications of serving strong reads from multiple replicas on the availability of the respective data deserve some discussion. The CAP theorem states that, in case of a network partition, it’s impossible to continue providing both availability and consistency of the data on both sides of the partition. In case of network partitions (and also in case of other failures), something’s got to give. In the context of CockroachDB’s implementation of Global Tables, the design decision came down to choosing between read availability everywhere vs read/write availability somewhere. We chose the latter: in case of a partition or region failure, one side of the partition will maintain read/write availability to the data whereas the other side will have neither. An alternative implementation could maintain read availability in all regions by forgoing write availability (i.e. if writes were to coordinate with all regions, then each region could continue serving strong reads even when disconnected from the rest). Preferring to maintain write availability seems like the right choice for CockroachDB for a couple of reasons:

As the number of geographical regions in clusters grows (and we’re betting it will grow), one region becoming temporarily unavailable will become more common, as will networking issues between regions. The cost of allowing the effects of a region failure to spread to the other regions seems likely to only go up in the long term.

Write availability is actually related perfidiously to read availability in CockroachDB, through our transactional write semantics. When performing writes on behalf of a transaction (and CockroachDB, besides being all about multi-region, is all about transactions) those writes hold locks on the modified data until the transaction is committed. If the transaction cannot be committed, the locks cannot be released. Committing the transaction needs write availability to a particular range among the ranges that the transaction touched. Thus, write unavailability to that range effectively means read unavailability on arbitrarily different ranges. So, by necessity, CockroachDB is also all about high (write) availability.

For a Global Table, writes to the table’s data need a quorum of replicas located either within a single region or across three regions, depending on the SURVIVE setting. In case of a network partition, clients in regions partitioned away from this quorum cannot perform reads or writes. Clients in regions with connectivity to the quorum maintain read/write availability. If the quorum is not configured to survive region failure, then the failure of the quorum’s region takes away read/write availability of the data for all other regions. Failures of other regions do not impact non-failed regions.
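These cases can be checked with a simplified model in which a client keeps read/write availability exactly when it can reach a majority of the voting replicas. The function name, the region names, and the replica placements are hypothetical; real placement depends on the database's configuration.

```python
def has_read_write_availability(voter_regions, reachable_regions):
    """True if a majority of voters live in regions the client can reach.

    voter_regions lists the region of each voting replica, one entry per voter.
    """
    reachable_voters = sum(1 for r in voter_regions if r in reachable_regions)
    return reachable_voters >= len(voter_regions) // 2 + 1


# Hypothetical placements for the two survival goals.
zone_survival = ["us-east", "us-east", "us-east"]
region_survival = ["us-east", "us-east", "us-west", "us-west", "eu-west"]

# us-east has failed; the client can still reach us-west and eu-west.
after_failure = {"us-west", "eu-west"}
print(has_read_write_availability(zone_survival, after_failure))
print(has_read_write_availability(region_survival, after_failure))

# A client partitioned into eu-west alone cannot reach the quorum.
print(has_read_write_availability(region_survival, {"eu-west"}))
```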

The Duplicate Index Topology Pattern that we linked to before functions the other way, choosing read availability over write availability. This is because of happenstance, rather than design.

In conclusion to a long read about fast reads

Global Tables let database clients in any region read strongly consistent data with region-local latencies. They’re an important piece of the multi-region puzzle — providing latency characteristics that are well suited for read-mostly, non-localized data.

Global Tables compose with their counterpart, Regional Tables, which are well suited for localized data. To learn more about data homing in regional tables, check out this blog post. To see how these two table localities all tie together to create a cohesive multi-region story, continue reading with this blog post.